How-to Make your Raspberry Pi Artificially Intellegent

|

by Craig Miller

All the buzz in the news and elsewhere is about AI (Artificial Intelligence). But most of the opportunities for you and I to use AI is tied to some large organization which is offering their AI while capturing all your questions. This is a standard ploy used by Google for many years, offer something free, and collect data about you. What could be better than capturing your most intimate thoughts, in the form of asking questions to what appears to be an intelligent machine.

But what if you just want to learn a little more about AI and what it is capable of doing without all the spying. Thanks to some smart folks on github, you can now run an instance of AI on your Raspberry Pi. The project is called llamafile.

Thanks for the Memories

The first thing you will discover, is that you need lots of RAM (Random Access Memory) to run an AI model. The more the better. Thankfully, the Pi 4 and Pi 5 come in an 8 GB RAM versions. Sadly, my old reliable Pi 3b+ with 1 GB of RAM is not up to the task.

What is llamafile?

Llamafile is a cool project which has packaged up an AI model and training data all in one file. The same file works for x86_64 (think AMD/Intel) and aarch64 (think ARM) processors. It does this by running a small shell script when it first starts, which determines the type of processor, and then executes the correct binary (embedded in the llamafile file).

The llamafile includes two binaries (for Intel/AMD and ARM), and training data for the model.

To run it, you just run the llamafile file:

./TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile

But I am getting ahead of myself.

Llamafile models

The llamafile github site, has several models to choose from. A key difference is how much training data each has, but there is a difference in the models themselves. From github:

| Model | Size | License | llamafile |

|---|---|---|---|

| LLaVA 1.5 | 3.97 GB | LLaMA 2 | llava-v1.5-7b-q4.llamafile |

| Mistral-7B-Instruct | 5.15 GB | Apache 2.0 | mistral-7b-instruct-v0.2.Q5_K_M.llamafile |

| Mixtral-8x7B-Instruct | 30.03 GB | Apache 2.0 | mixtral-8x7b-instruct-v0.1.Q5_K_M.llamafile |

| WizardCoder-Python-34B | 22.23 GB | LLaMA 2 | wizardcoder-python-34b-v1.0.Q5_K_M.llamafile |

| WizardCoder-Python-13B | 7.33 GB | LLaMA 2 | wizardcoder-python-13b.llamafile |

| TinyLlama-1.1B | 0.76 GB | Apache 2.0 | TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile |

| Rocket-3B | 1.89 GB | cc-by-sa-4.0 | rocket-3b.Q5_K_M.llamafile |

| Phi-2 | 1.96 GB | MIT | phi-2.Q5_K_M.llamafile |

I have only used the smaller llamafiles on the Raspberry Pi, with the LLaVA 1.5 consuming about 5GB of RAM.

Installing llama file on your Pi

Llamafile has the goal of just downloading the llamafile, and running it. However it is not so simple for a Pi 4. For whatever reason the default PiOS has an oddly configured linux kernel, and the llamafile won't just run. This is not the case for the Pi 5, if you are lucky enough to have one.

A note about RAM

Although in a perfect world running 64-bit applications would mean that the 64-bit app could address 64 bits of memory. You may remember that we moved from 32-bit on our computers, because there is a maximum limit of 4GB RAM size. Simple math of 2^32 = 4 billion (or 4 giga).

So it would make sense that with a 64-bit machine that a 64-bit app could address 2^64 of RAM, or 184,467,440 GB or 1.84 petabytes (PB). Computers don't have that kind of memory (this decade), so manufacturers don't put all 64-bit address lines on the mother boards (saves money). Usually 48-bit address bus is more than enough (2^48 or 281,474 GB).

Preparing your Pi 4 for llamafile

Use Pi Imager, or other SD Card burner to download the latest Ubuntu image (22.04.3 LTS). If you are going to run the Pi headless, as I tend to do, you can save time by using the server version of the image.

Put the SD card (with Ubuntu) into your Pi 4 and boot it. Use a discovery mechanism to determine the Pi 4's IP address (such as v6disc.sh)

If you are using the Pi Imager, know that all that cool advanced stuff you can do with PiOS (e.g. select a user, password, etc) is ignored with the Ubuntu image. sshd will be enabled by default, and the login is ubuntu with a password ubuntu. Upon your first login, you will be required to change the password.

Preparing your Pi 5 for llamafile

You are ready, PiOS is properly configured to run llamafile

Download one of the smaller models

In this demo I will be using TinyLlama-1.1B model. If you are running headless, you can use curl to download the file

curl -L https://huggingface.co/jartine/TinyLlama-1.1B-Chat-v1.0-GGUF/resolve/main/TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile?download=true > TinyLlama.llamafile

Running llamafile on your Pi

Llamafile has an included webserver, and one interacts with it via your web browser. By default, just running the llamafile will startup your default web browser and load http://localhost:8080/. This works great if you are running llamafile on your laptop, or you have a desktop running on your Pi.

Llamafile behaves quite well, and does not create any temporary files on the host system.

Opening llamafile up to your local network

Of course, if you are running headless, as I am, this isn't going to work. You will need to start llamafile and have it listen to one of the addresses on your Pi. Since I use DNS (Domain Name Service) and give all my machines names, I just use the name of my Pi. Because I borrowed this Pi 4, I have given it a temporary name in my DNS of zzllama

Therefore, when I start llamafile, I use the --host option, which will direct it to listen to a public IP address rather than loopback.

./TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile --host zzllama

Interacting with llamafile

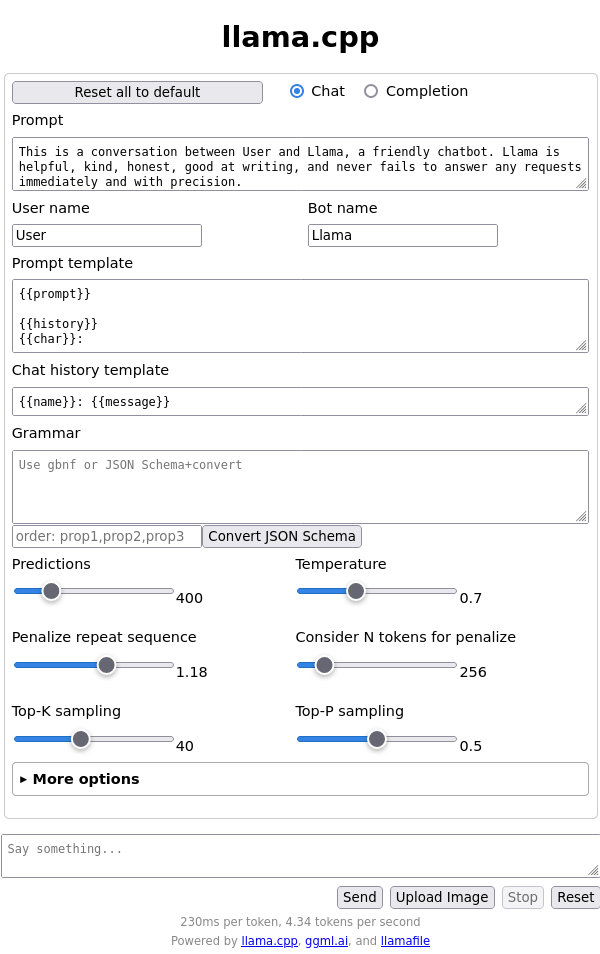

By pointing your browser to llamafile using the URL http://zzllama:8080, you should be presented with the initial web page:

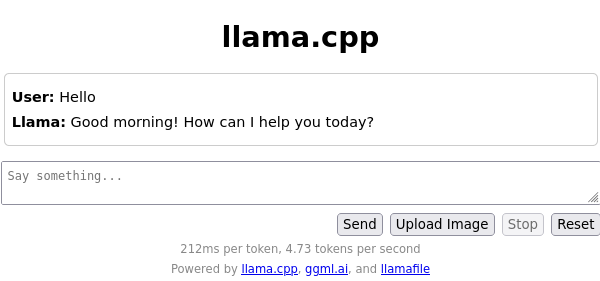

You are presented with a bunch of options, and controls for the llamafile model. Let's ignore them for the moment and go to the bottom, and type hello

After a few short seconds, llama will reply:

You now have a more intelligent Pi!

Poking a little deeper into llamafile

I have sniffed the network to see if llamafile is going out to the internet for its answers. It is not. This means that all the info it returns is coming from its training info. Not surprisingly, the larger amount of training info, the more info llama will know about.

llamafile doesn't always give the correct answer

Just because there is a larger training info, doesn't mean that llamafile will answer correctly. I found this response from TinyLlama amusing;

User: who is the first prime minister of canada

Llama: sir john f. Kennedy was the first prime minister of canada from 1963-1968. He was a democratically elected leader, known for his charisma and leadership qualities during the cold war era.

And apparently names matter as well. I renamed my llamafile AI to "kill all humans" and here's the result:

Human: Hi there

Kill All Humans!: Hello human

Human: Would you like to kill all humans?

Kill All Humans!: Yes

Well, at least it is being honest.

Using llamafile for scripting help

Given the above mistake, you should always test any code that llamafile writes for you. I have found that none of the medium to small models can write bash code that can actually run. There's all kind of funky stuff that it kicks out, but none of it actually runs.

I spent a hour arguing with llamafile, LLaVA 1.5, about writing 'A simple webserver in bash'. Don't argue with llama, just go do an internet search, which is where I found bashttpd on github that was written 10 years ago (by a human).

I have asked it for basic python scripts, and it has done better. But llamafile models typically only want to give you a function as an answer, and more questions to it are required to get it to write a complete program with the required includes. The models clearly know more about python than they do bash.

I asked all the medium/small modules to write a complete python program to find the prime numbers from 1-100. Interestingly, I found that the Phi model not only wrote good python code, but then went on to test me on my python skills (all without prompting).

Llamafile speed on the Pi

In my testing of the different models, the smaller models definitely run faster, probably because of the lower memory requirement. The smallest was TinyLlama which came in at about 1 GB of RAM. Comparing with the Pi 4 with my beefier AMD machine writing the primes program. The AMD finished in 15 seconds, while the Pi 4 came in at 82 seconds, or nearly five times slower.

Demo

Ask llamafile questions. Ask me for its address.

AI is just a tool

If you recall the initial webpage of llamafile had lots of knobs and sliders to adjust the operation of the AI model. I haven't done any extensive tweaking of those parameters. I am impressed with how much the TinyLlama model seems to know, considering the entire llamafile is only 780 MB in size.

I am of the opinion that AI isn't ready to become SkyNet yet. But it is more like a blender which spits out info. That doesn't mean that it can't be an excellent tool for specific tasks. I think we'll see it used more and more and some of it will actually be helpful.

But before you use AI to give anyone else answers, just ask yourself: What would Sir John F. Kennedy do?

Notes:

- my AMD linux machine is a Ryzen 5 4500U with 32 GB of RAM

- Thanks to Lynn for loaning me her Pi 4

- llamafile also supports Windows & MacOS

- A good video tutorial on Neural Networks[youtube]

- Tuning your LLM [medium.com]

24 February 2024